Back in October 2013, in the relatively early days of WebRTC, I set out to get a better understanding of the getUserMedia API and camera constraints in one of my first and most popular posts. I discovered that working with getUserMedia constraints was not all that straightforward. A year later

Tag: getUserMedia

I interviewed W3C WebRTC editor Dan Burnett on ORTC, the general progress of WebRTC, and what we can expect next:

https://webrtchacks.com/qa-w3c-editor-dan-burnett/

I continue to investigate my question: how do WebRTC getUserMedia constraints work? In this webrtcHacks post I update my resolution scanning code and re-run my previous analysis to determine empirically how getUserMedia constraints are handled.

To learn more, read here: https://webrtchacks.com/video-constraints-2/
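For readers who want to try this kind of scan themselves, here is a minimal sketch of the idea. It is not the post's actual code. It assumes the modern constraint syntax (`exact` width and height) and `MediaStreamTrack.getSettings()`, neither of which was available in the 2013-era API. The candidate list, the `scanResolutions` name, and the small `*Like` interfaces are hypothetical, chosen so the logic can run without a camera. Each size is requested on its own, the delivered size is read back, and the camera is released before the next request.

```typescript
// Minimal structural types (hypothetical) so the sketch runs without a camera;
// real MediaStream and MediaStreamTrack objects have these methods.
interface VideoTrackLike {
  stop(): void;
  getSettings(): { width?: number; height?: number };
}
interface StreamLike {
  getVideoTracks(): VideoTrackLike[];
  getTracks(): { stop(): void }[];
}
type ExactSize = { video: { width: { exact: number }; height: { exact: number } } };
type GetUserMedia = (constraints: ExactSize) => Promise<StreamLike>;

interface ScanResult {
  label: string;
  requested: [number, number];
  actual?: [number, number]; // undefined when the request was rejected
  ok: boolean; // true only if the delivered size matches the request exactly
}

// Hypothetical candidate list; extend it with the sizes you care about.
const CANDIDATES: [string, number, number][] = [
  ["QVGA", 320, 240],
  ["VGA", 640, 480],
  ["HD", 1280, 720],
  ["Full HD", 1920, 1080],
];

// Probe each size one at a time and record what the browser delivered.
async function scanResolutions(
  getUserMedia: GetUserMedia,
  candidates: [string, number, number][] = CANDIDATES,
): Promise<ScanResult[]> {
  const results: ScanResult[] = [];
  for (const [label, width, height] of candidates) {
    let stream: StreamLike | undefined;
    try {
      stream = await getUserMedia({
        video: { width: { exact: width }, height: { exact: height } },
      });
      const settings = stream.getVideoTracks()[0].getSettings();
      const actual: [number, number] = [settings.width ?? 0, settings.height ?? 0];
      results.push({
        label,
        requested: [width, height],
        actual,
        ok: actual[0] === width && actual[1] === height,
      });
    } catch {
      // e.g. OverconstrainedError when the size can't be met
      // (a permission error would also land here).
      results.push({ label, requested: [width, height], ok: false });
    } finally {
      // Release the camera before asking for the next size.
      stream?.getTracks().forEach((track) => track.stop());
    }
  }
  return results;
}
```

In a browser you would pass a wrapper around the real API, e.g. `scanResolutions((c) => navigator.mediaDevices.getUserMedia(c)).then(console.table)`. Probing sizes one at a time and stopping each stream follows the "kill the stream before applying new constraints" advice listed in the highlights further down.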

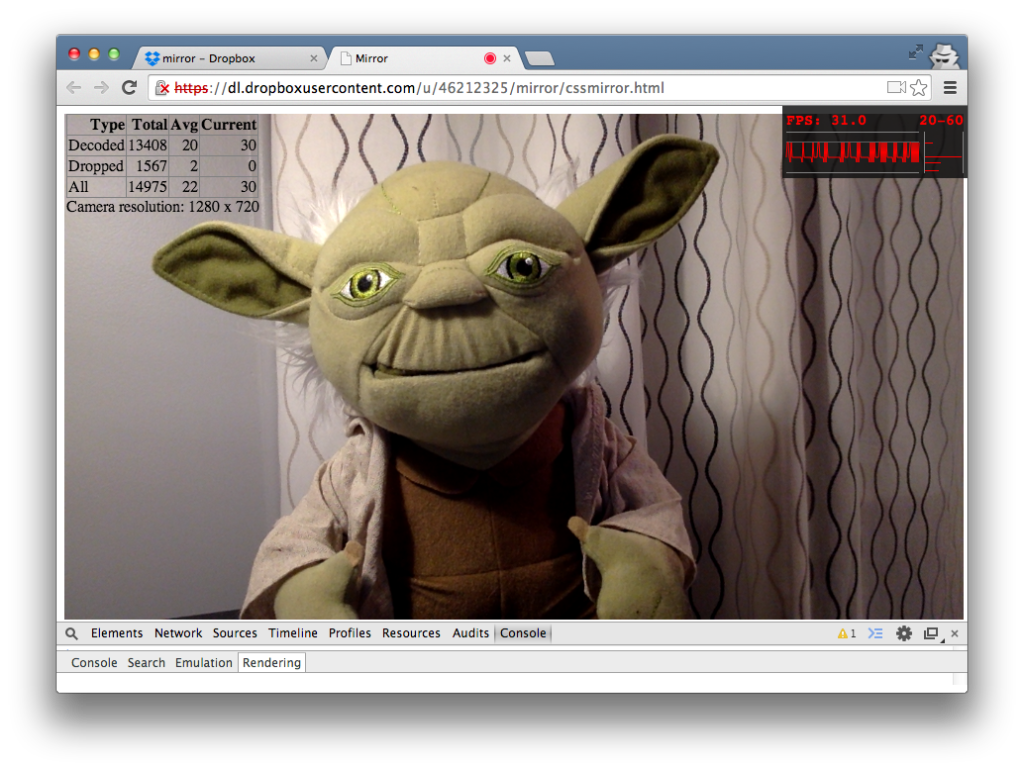

Get started with getUserMedia by creating a simple mirror application using canvas and CSS techniques.
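If you only need the preview to look like a mirror, CSS `transform: scaleX(-1)` on the `<video>` element is enough. Copying flipped pixels onto a canvas (for a snapshot, say) needs the flip applied to the drawing context instead. The sketch below shows that canvas transform; `drawMirroredFrame` and `Ctx2DLike` are hypothetical names, and the interface is just the subset of `CanvasRenderingContext2D` the function touches.

```typescript
// Subset of CanvasRenderingContext2D used here (hypothetical interface name);
// a real 2D canvas context satisfies it.
interface Ctx2DLike {
  save(): void;
  restore(): void;
  translate(x: number, y: number): void;
  scale(x: number, y: number): void;
  drawImage(image: unknown, dx: number, dy: number, dw: number, dh: number): void;
}

// Draw one frame mirrored left-to-right: move the origin to the right edge,
// flip the x axis, draw normally, then restore the untransformed state.
function drawMirroredFrame(ctx: Ctx2DLike, source: unknown, width: number, height: number): void {
  ctx.save();
  ctx.translate(width, 0);
  ctx.scale(-1, 1); // a source pixel at x now lands at width - x
  ctx.drawImage(source, 0, 0, width, height);
  ctx.restore();
}
```

In a page you would call it from a `requestAnimationFrame` loop with the live `<video>` element as the source, e.g. `drawMirroredFrame(canvas.getContext("2d")!, video, canvas.width, canvas.height)`. Wrapping the transform in `save()`/`restore()` keeps the flip from leaking into anything else drawn on the same canvas.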

I also did some experiments with frame rates and found some non-obvious results.

Link: https://webrtchacks.com/mirror-framerate/
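The following is not the post's measurement method. It shows one hedged way to check the frame rate you actually receive, as opposed to the one you asked for: timestamp each decoded frame and average the intervals. It assumes `HTMLVideoElement.requestVideoFrameCallback()`, which is newer than the post and not available in every browser. `collectFrameTimestamps` and `estimateFps` are hypothetical helpers.

```typescript
// Estimate frames per second from per-frame timestamps in milliseconds,
// using the span between the first and last frame so jitter averages out.
function estimateFps(timestampsMs: number[]): number {
  if (timestampsMs.length < 2) return 0;
  const spanMs = timestampsMs[timestampsMs.length - 1] - timestampsMs[0];
  return spanMs > 0 ? ((timestampsMs.length - 1) * 1000) / spanMs : 0;
}

// Record `count` frame timestamps from a <video> element via
// requestVideoFrameCallback (assumed available; not in every browser).
// The structural type lets a real HTMLVideoElement or a mock be passed in.
function collectFrameTimestamps(
  video: { requestVideoFrameCallback(cb: (now: number) => void): number },
  count: number,
): Promise<number[]> {
  return new Promise((resolve) => {
    const stamps: number[] = [];
    const onFrame = (now: number) => {
      stamps.push(now);
      if (stamps.length >= count) resolve(stamps);
      else video.requestVideoFrameCallback(onFrame); // wait for the next frame
    };
    video.requestVideoFrameCallback(onFrame);
  });
}
```

Comparing `estimateFps(...)` with `track.getSettings().frameRate` shows whether the camera is delivering what it was configured for; the configured value and the delivered rate may differ, for example if the camera drops frames in low light.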

I did some experiments with WebRTC's getUserMedia() constraints. This led me down a rat hole, but I had some interesting results.

Highlights include:

- Non-obvious findings:
  - Mandatory constraints are really suggestions.
  - Kill the stream before applying new constraints.
  - Use https for a better user experience.
  - Don’t bother changing constraints in
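The "kill the stream" finding can be wrapped in a small helper: stop every track on the current stream, which releases the camera, and only then request a new one. This is a minimal sketch; `restartWithConstraints` and `StreamLike` are hypothetical names, and the getUserMedia function is passed in so the ordering can be tested without a camera.

```typescript
// Minimal structural type (hypothetical); a real MediaStream satisfies it.
interface StreamLike {
  getTracks(): { stop(): void }[];
}

// Stop every track on the current stream (releasing the camera) and only
// then request a new stream with the new constraints.
async function restartWithConstraints<S extends StreamLike, C>(
  current: S | null,
  constraints: C,
  getUserMedia: (constraints: C) => Promise<S>,
): Promise<S> {
  current?.getTracks().forEach((track) => track.stop());
  return getUserMedia(constraints);
}
```

In a page this might look like `stream = await restartWithConstraints(stream, { video: { width: { exact: 1280 } } }, (c) => navigator.mediaDevices.getUserMedia(c))`. Note that the constraint syntax has moved on since these experiments: in the standard syntax a bare value such as `width: 1280` is treated as `ideal`, so only `exact`, `min`, and `max` are binding. Browsers also now offer `MediaStreamTrack.applyConstraints()` for changing a live track; whether it behaves better than a full restart is worth testing on the browsers you target.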